High-detailed

It captures complex cloth deformation, and intricate texture details, like delicate satin ribbon and special headwear.

High-quality human reconstruction and photo-realistic rendering of a dynamic scene is a long-standing problem in computer vision and graphics. Despite considerable efforts invested in developing various capture systems and reconstruction algorithms, recent advancements still struggle with loose or oversized clothing and overly complex poses. In part, this is due to the challenges of acquiring high-quality human datasets.

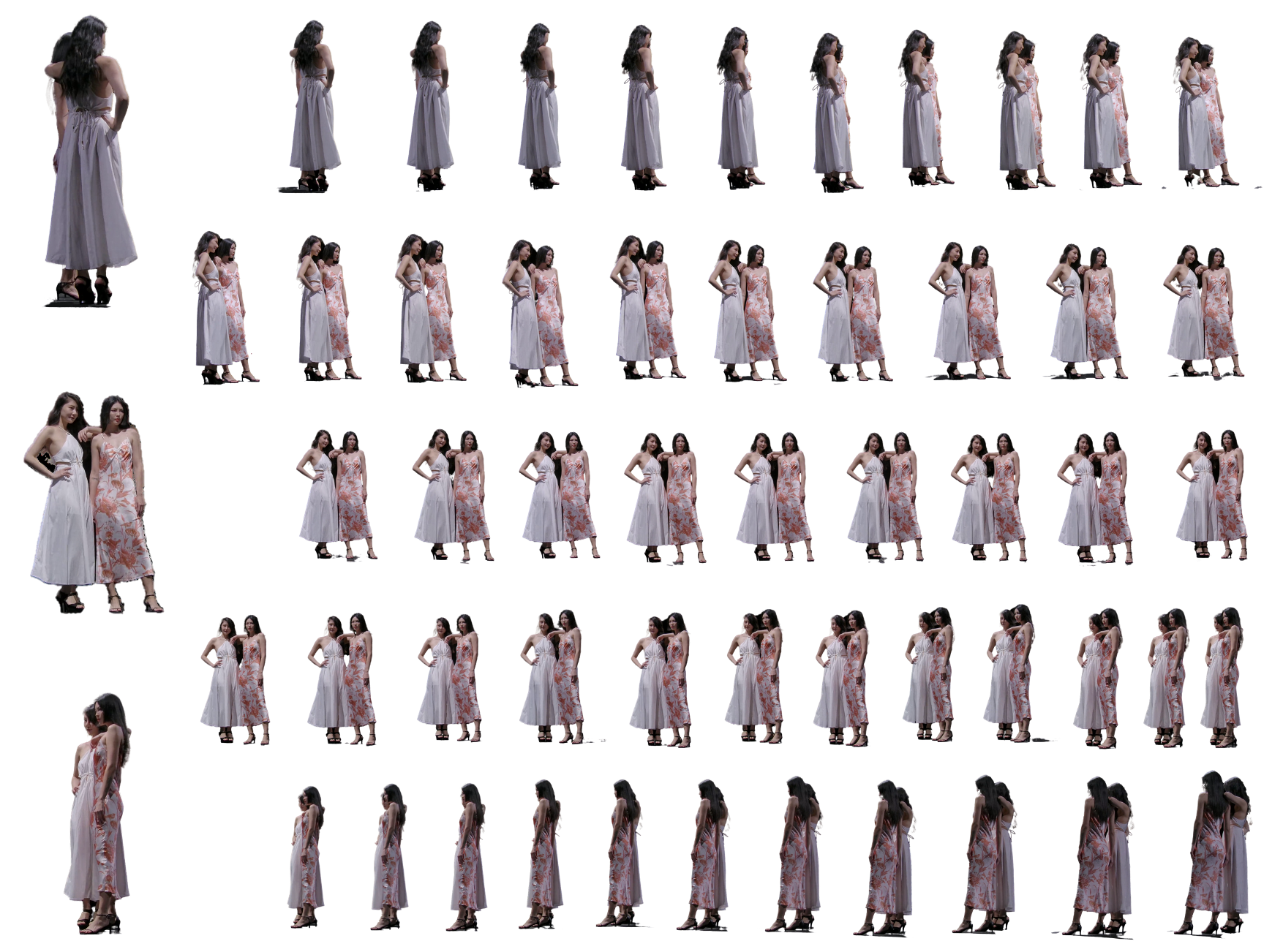

To facilitate the development of these fields, in this paper, we present PKU-DyMVHumans, a versatile human-centric dataset for high-fidelity reconstruction and rendering of dynamic human scenarios from dense multi-view videos. It comprises 8.2 million frames captured by more than 56 synchronized cameras across diverse scenarios. These sequences comprise 32 human subjects across 45 different scenarios, each with a high-detailed appearance and realistic human motion.

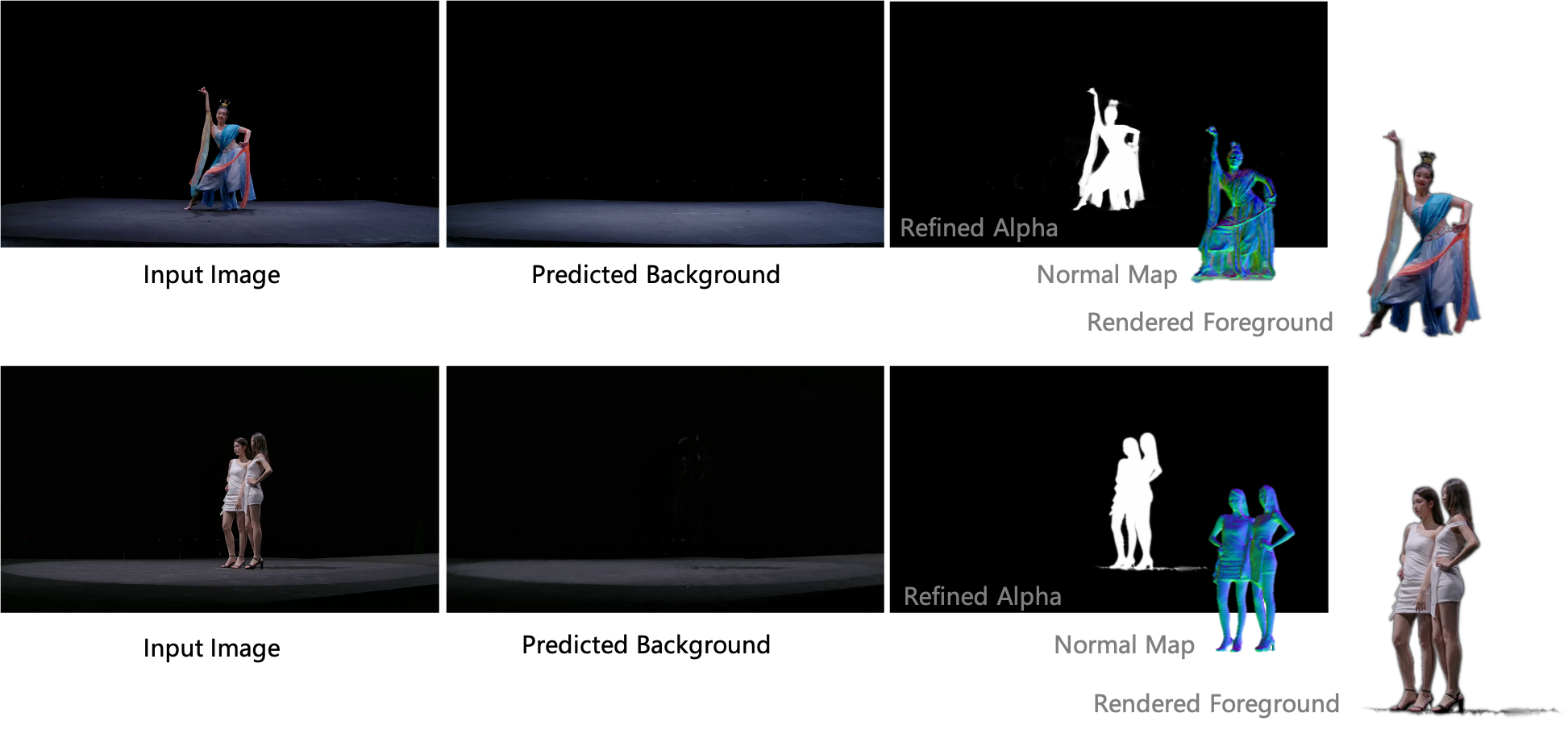

Inspired by recent advancements in neural radiance field (NeRF)-based scene representations, we carefully set up an off-the-shelf framework that is easy to provide those state-of-the-art NeRF-based implementations and benchmark on PKU-DyMVHumans dataset. It is paving the way for various applications like fine-grained foreground/background decomposition, high-quality human reconstruction and photo-realistic novel view synthesis of a dynamic scene.

Extensive studies are performed on the benchmark, demonstrating new observations and challenges that emerge from using such high-fidelity dynamic data.

It captures complex cloth deformation, and intricate texture details, like delicate satin ribbon and special headwear.

It covers a wide range of special costume performances, artistic movements, and sports activities.

It includes human-object interactions, multi-person interactions and complex scene effects (like smoking).

We construct professional multi-view system to capture humans in motion, which contains 56/60 synchronous cameras with 1080p or 4K resolution.

The objective of our benchmark is to achieve robust geometry reconstruction and novel view synthesis for dynamic humans under markerless and fixed multi-view camera settings, while minimizing the need for manual annotation and reducing time costs. This includes neural scene decomposition, novel view synthesis, and dynamic human modeling.

We show that Surface-SOS can decompose scene into geometrically consistent foreground, texture-completed backgrounds, and generate convincing segmentation and normal maps.

Novel view synthesis from a sparse multi-view videos.

The results of space and time novel view rendering under short frame sequences.

@article{zheng2024PKU-DyMVHumans,

title={PKU-DyMVHumans: A Multi-View Video Benchmark for High-Fidelity Dynamic Human Modeling},

author={Zheng, Xiaoyun and Liao, Liwei and Li,Xufeng and Jiao, Jianbo and Wang, Rongjie and Gao, Feng and Wang, Shiqi and Wang, Ronggang},

journal={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2024} }